Money In, Project Out: How AI Will Disrupt Development Finance

Why solar projects are like decision trees — Unbundling the development capital stack — Towards a common language of risk

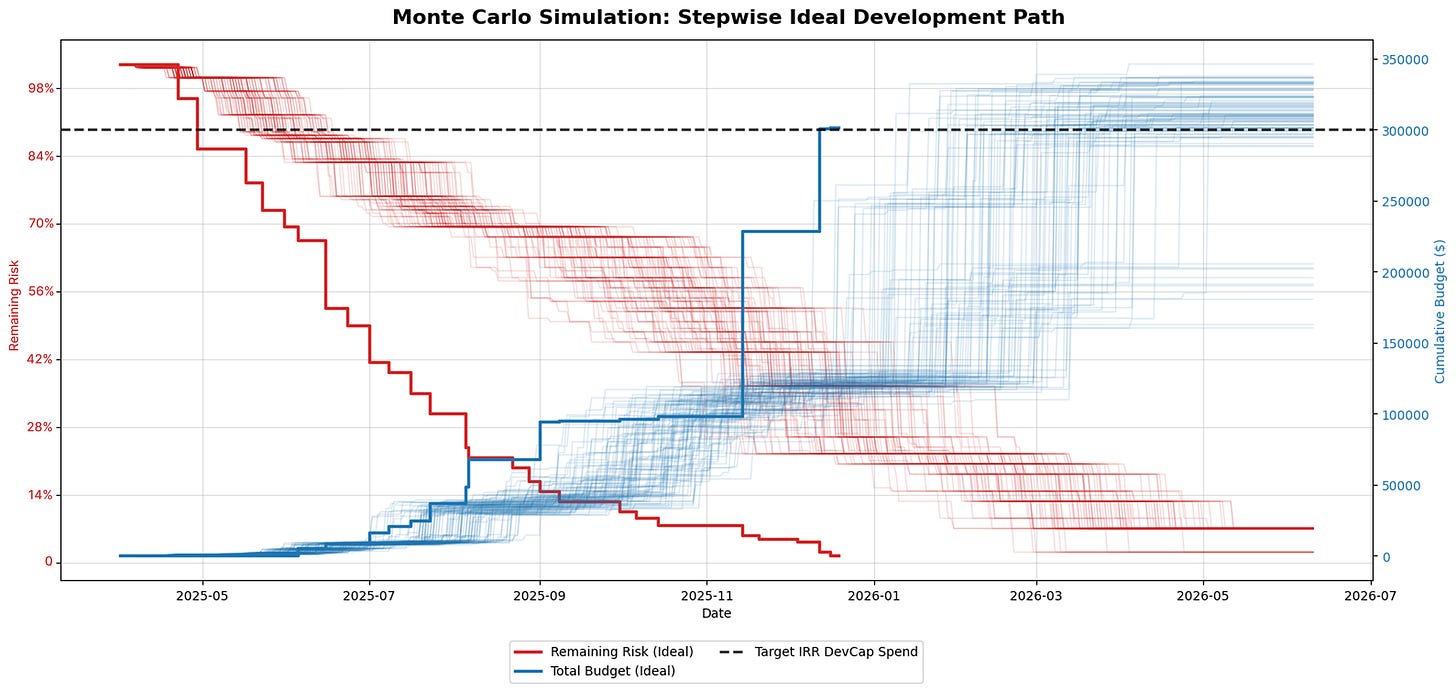

What you're looking at in this image are 500 simulations of solar project development pathways in upstate NY.

Last spring, a small group of us at Paces set off to create a model that captured the inherent uncertainty of building energy infrastructure. Our approach was to build a standardized development plan from first principles, then inject some randomness into a simple algorithm and let it go nuts:

Reduce the greatest amount of risk with the least amount of capital, as quickly as possible.

This process not only produced a Monte Carlo visualization, which is always fun, but deepened my understanding of the project development process and led me to believe that AI will likely "unbundle" the early-stage development capital stack over time.

In our model, three core variables drive each simulation:

Risk: the likelihood the project will fail, which decreases as critical milestones within interconnection, permitting, etc., are reached

Budget: total development spend on the project, which increases over time as more advanced studies and deposits are required to reach later milestones

Target Internal Rate of Return (IRR): the threshold where development expenses make project returns insufficient for investors in the project or portfolio

These metrics are distillations of very complex processes and reflect real-world trade-offs developers face all the time. And they interact in surprising ways.

Risk

Development risk materializes in two ways: either the project fails to be completed outright, or getting it built becomes so expensive that it doesn't pencil.

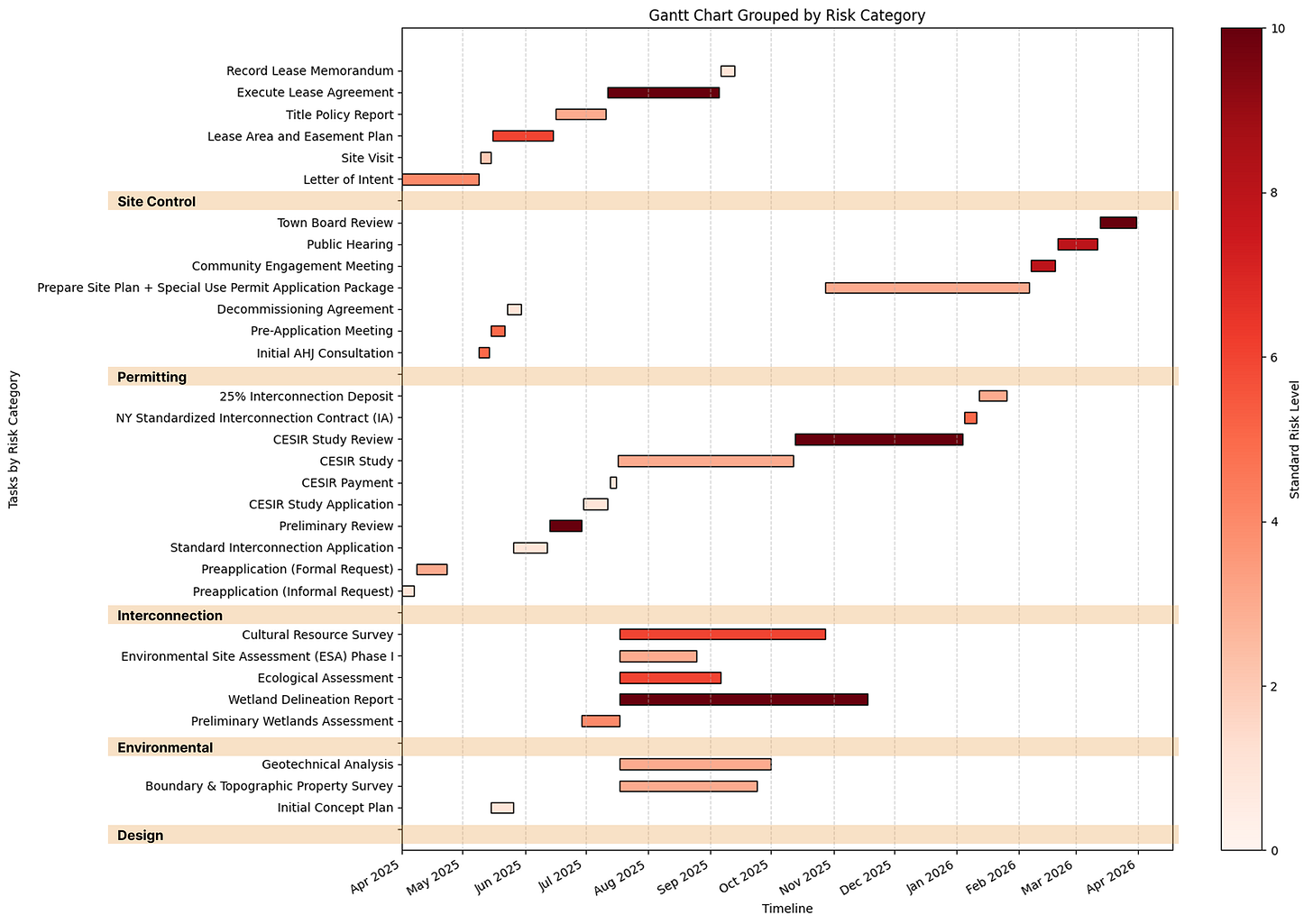

Risk is driven by several interrelated "swimlanes", such as permitting (getting cleared by regulators), interconnection (connecting to the grid), site control (acquiring rights to the land), etc. Each swimlane contains key milestones that must be completed to reach Notice to Proceed (NTP).

Achieving a milestone directly reduces risk, and the amount of risk removed is proportional to how often projects fail at that milestone. For example, signing an Interconnection Agreement with the utility or obtaining Planning Board approval for a Special Use Permit are significant milestones that take large chunks of risk off the table. A project with no milestones left has been fully de-risked and has a near-100% probability of getting built.
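To make the proportionality idea concrete, here is a minimal sketch. The failure counts, the starting risk of 0.8, and the function names are all made up for illustration; they are not figures or code from our model.

```python
# Hypothetical counts of past projects that failed at each milestone.
# These numbers are illustrative assumptions, not survey data.
FAILURES_BY_MILESTONE = {
    "site control": 10,
    "wetland delineation": 15,
    "special use permit": 30,
    "interconnection agreement": 45,
}


def risk_shares(failure_counts):
    """Share of total risk held by each milestone, proportional to failure frequency."""
    total = sum(failure_counts.values())
    return {name: count / total for name, count in failure_counts.items()}


def remaining_risk(initial_risk, shares, completed):
    """Risk left after retiring the shares of every completed milestone."""
    retired = sum(shares[name] for name in completed)
    return initial_risk * (1 - retired)


shares = risk_shares(FAILURES_BY_MILESTONE)
# Assume a project starts with an 80% chance of failing somewhere before NTP.
after_ia = remaining_risk(0.8, shares, ["interconnection agreement"])
after_all = remaining_risk(0.8, shares, list(FAILURES_BY_MILESTONE))
```

In this toy setup the Interconnection Agreement holds the largest share because the most projects died there, so signing it retires the most risk in one step.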

Budget

However, achieving milestones also requires money, whether that means paying consultants, application fees, and deposits, or funding a team to move the project along. If the project site has particular attributes that cost a lot to develop (e.g., extra environmental studies to assess the impact on some famous Threatened Turtle), then those costs must be factored into the development budget.

At the same time, developers must be wary of milestones that don't necessarily cost a lot to achieve but still increase the capital requirements of the project. For example, if the site design requires a longer path to interconnect with the grid to avoid an uncooperative landowner, it increases equipment and construction costs.

Finally, developers must be mindful of conversion rates and take into account resources spent developing projects in their pipeline that ultimately do not get to NTP. Baked into the expense of developing a successful project is the deadweight of promising sites that didn't make it.
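The deadweight point is just arithmetic, but it is easy to underestimate. A rough sketch, with an entirely hypothetical four-project pipeline:

```python
# Hypothetical pipeline: (development spend in $, reached NTP?)
PIPELINE = [
    (300_000, True),   # the one project that made it
    (50_000, False),   # died at permitting
    (80_000, False),   # died at interconnection
    (20_000, False),   # disqualified early ("fail fast")
]


def cost_per_successful_project(pipeline):
    """Total dev spend across the pipeline divided by the number of projects at NTP."""
    total_spend = sum(spend for spend, _ in pipeline)
    successes = sum(1 for _, reached_ntp in pipeline if reached_ntp)
    if successes == 0:
        raise ValueError("no project reached NTP, so the cost per success is undefined")
    return total_spend / successes


def conversion_rate(pipeline):
    """Fraction of attempted projects that reached NTP."""
    return sum(1 for _, reached_ntp in pipeline if reached_ntp) / len(pipeline)


loaded_cost = cost_per_successful_project(PIPELINE)
rate = conversion_rate(PIPELINE)
```

Here the successful project cost $300k to develop on its own, but carries $450k once the failed sites are loaded onto it. Killing bad sites earlier shrinks that gap.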

IRR

In turn, project or portfolio budgets depend on the return expectations of the capital provided at various stages. Early in the development process, spend per project is low because uncertainty and risk are high. As development progresses, risk falls: relationships with landowners, permitting authorities, and utilities are established, which clarifies the risk of the remaining milestones. Meanwhile, capital requirements rise as developers pony up for expensive milestones like land options, field studies, and interconnection deposits. The cost of capital directly follows risk, as the equity dollars used early in the process give way to debt for the least risky stuff at the end, like construction financing.

What this means is that developers are constantly maintaining a financial model to ensure DevEx and CapEx line up with return expectations for each investor in their capital stack. Risk assessment is often bucketed at common financing checkpoints, where its form and level of scrutiny depend on whether the financial transaction is occurring with a third party or an internal investment committee. Regardless, the name of the game is to "fail fast" by disqualifying unworkable projects early and forecasting total dev spend as quickly and accurately as possible.
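As a heavily simplified sketch of that check, the snippet below computes an IRR by bisection and backs out the largest development budget that still clears a target. It assumes all DevEx and CapEx land at year 0 and a flat annual cash flow afterward, which no real project model would do; the dollar figures and the 8% target are placeholders.

```python
def npv(rate, cash_flows):
    """Net present value of yearly cash flows, with cash_flows[0] at year 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=1.0, tol=1e-9):
    """IRR by bisection. Assumes one upfront outflow followed by inflows,
    so NPV falls as the rate rises and crosses zero once between lo and hi."""
    for _ in range(200):
        mid = (lo + hi) / 2
        if npv(mid, cash_flows) > 0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return (lo + hi) / 2


def max_dev_spend(target_irr, capex, annual_cash_flow, years):
    """Largest year-0 DevEx that still meets the target IRR under these assumptions."""
    inflows_pv = sum(annual_cash_flow / (1 + target_irr) ** t for t in range(1, years + 1))
    return inflows_pv - capex


# Hypothetical project: $5M CapEx, $600k/yr of cash flow for 25 years, 8% target IRR.
budget = max_dev_spend(0.08, 5_000_000, 600_000, 25)
check = irr([-(5_000_000 + budget)] + [600_000] * 25)
```

Spending exactly `budget` on development lands the project at the target IRR; every dollar beyond that means it no longer pencils for that investor.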

The Middle Capital Problem

What we've seen across all development types is that the middle financing stage is the most complex: project costs tend to escalate sharply while a high degree of risk still remains. My boss at Paces, James McWalter, has highlighted that this "middle capital problem" is particularly acute in datacenter development right now: lots of money spent taking early land positions, lots of money ready to be deployed for nearly complete projects, but not a lot ready to churn through messy intermediate stages.

As such, middle stages are often characterized by fancy mezzanine / hybrid loans with option-to-buy structures that help raise the capital needed to push projects through rather than letting them "die on the vine".1

The Development Plan

To illustrate the dynamics of risk, budget, and investor expectations, we mapped out each milestone required to reach NTP for a representative community solar project in NY across 5 major swimlanes. We then surveyed developers and civil engineers to set parameters for timelines, budgets, and risk for each milestone in our standardized development plan. Finally, we made the share of risk held per milestone and swimlane vary based on specific characteristics so we could cluster sites into common "archetypes".

Sites closer to wetlands, for example, usually require more extensive field studies or site design adjustments and would be mapped to the "wetlands" archetype. For these sites, achieving wetland milestones by completing delineations and critical habitat assessments retires a greater proportion of risk than other steps in the development process. Therefore, if you want to maximize risk reduction and minimize capital spend, the optimal development plan shifts environmental diligence earlier in the process. This is how one would produce the steepest decline in risk over time, as shown in the header image.
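Here is a toy version of that logic: rank activities by risk retired per dollar, reweight risk shares by archetype, and add noise to get a spread of pathways. It is a sketch under invented parameters, not our actual model. The activities, costs, shares, archetype weights, and function names are all assumptions.

```python
import random

# Hypothetical activities: name -> (cost in $, baseline share of risk, swimlane)
ACTIVITIES = {
    "land option": (20_000, 0.15, "site control"),
    "wetland delineation": (30_000, 0.10, "environmental"),
    "habitat assessment": (25_000, 0.05, "environmental"),
    "special use permit": (60_000, 0.30, "permitting"),
    "interconnection deposit": (150_000, 0.40, "interconnection"),
}
# Hypothetical archetype multipliers applied to a swimlane's risk share.
ARCHETYPE_WEIGHTS = {"baseline": {}, "wetlands": {"environmental": 4.0}}


def risk_shares(archetype):
    """Reweight baseline shares for the archetype, then renormalize to sum to 1."""
    weights = ARCHETYPE_WEIGHTS[archetype]
    raw = {n: share * weights.get(lane, 1.0) for n, (_, share, lane) in ACTIVITIES.items()}
    total = sum(raw.values())
    return {n: value / total for n, value in raw.items()}


def greedy_plan(archetype, rng=None, noise=0.0):
    """Order activities by (possibly jittered) risk retired per dollar, best first."""
    shares = risk_shares(archetype)
    scores = {}
    for name, (cost, _, _) in ACTIVITIES.items():
        jitter = rng.uniform(1 - noise, 1 + noise) if rng else 1.0
        scores[name] = shares[name] * jitter / cost
    return sorted(scores, key=scores.get, reverse=True)


def risk_curve(plan, archetype):
    """(cumulative spend, remaining fraction of starting risk) after each activity."""
    shares = risk_shares(archetype)
    spend, risk = 0, 1.0
    curve = [(spend, risk)]
    for name in plan:
        spend += ACTIVITIES[name][0]
        risk -= shares[name]
        curve.append((spend, max(risk, 0.0)))
    return curve


def simulate(archetype, n_runs=500, noise=0.5, seed=0):
    """Monte Carlo: repeat the noisy greedy ordering and collect the risk curves."""
    rng = random.Random(seed)
    return [risk_curve(greedy_plan(archetype, rng, noise), archetype) for _ in range(n_runs)]


baseline_plan = greedy_plan("baseline")
wetlands_plan = greedy_plan("wetlands")
curves = simulate("wetlands")
```

With these made-up numbers, the baseline plan leads with the land option, while the wetlands archetype pulls the delineation and habitat assessment to the front, which is the "shift environmental diligence earlier" behavior described above. Plotting `curves` gives a fan of declining lines in the spirit of the header image.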

How does AI fit into all this?

From the outset, I noticed our model is pretty similar to a common machine learning algorithm: decision trees. Decision trees learn from multidimensional datasets by asking yes/no questions and "splitting" on one variable at a time. It’s like 20 questions turned into a flowchart (which is essentially a development plan). At each split, it chooses the variable that reduces the greatest amount of "entropy" in the system, or in other words, maximizes information gained. Similarly, at each step in the development process, our algorithm chooses the activity that reduces the greatest amount of risk.
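For readers who haven't seen the entropy calculation, here is a small self-contained sketch of how a decision tree picks its split. The four "projects" and their features are invented purely to show the mechanics.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Entropy before the split minus the size-weighted entropy after splitting on feature."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    weighted = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - weighted


# Hypothetical toy dataset: did the project reach NTP?
rows = [
    {"near_wetland": True, "long_queue": True},
    {"near_wetland": True, "long_queue": False},
    {"near_wetland": False, "long_queue": True},
    {"near_wetland": False, "long_queue": False},
]
reached_ntp = [False, False, True, True]

gains = {f: information_gain(rows, reached_ntp, f) for f in ("near_wetland", "long_queue")}
best_split = max(gains, key=gains.get)
```

In this toy data, asking "is the site near a wetland?" separates the outcomes perfectly, while the queue question tells you nothing, so the tree splits on the wetland question first. Swap "information gained" for "risk retired per dollar" and you have the intuition behind our development plan ordering.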

Overall, this initiative helped our team truly understand the development process and adjust the near-term product roadmap of our site scoring algorithm to cluster sites by archetype.

However, what excites me most about our approach is how it stands to benefit from performance improvements in LLMs over time, enabling us to predict project success or failure more accurately.

This is the holy grail of data science 🤝 development

Throughout my career, one of the most difficult data science challenges has been building models in low-quality data environments. I've always been jealous of my peers working on LMP modeling, as price forecasting benefits from nice, clean datasets where they can easily map inputs to outputs and leverage supervised learning algorithms. Yet for predicting project success, there is no empirical fossil record of what happened to every project that was attempted and didn't make it, limiting our ability to learn from data.

LLMs allow us to widen our toolbox beyond induction by leveraging their ability to reason at scale. If AI agents are set loose on publicly available yet unstructured data and told to "predevelop" sites according to a standardized development plan, then they can do much of the heavy desktop research (finding and verifying data, generating rough site designs, crafting outreach emails) upfront.

The best way to find out whether a project is viable has always been to just start developing it. The problem, however, is that this is a very manual process that takes knowledge, energy, and time on the part of the developer, making it prohibitively expensive (in time, and time is money).

But if this work can be automated... what will be its effect on the industry?

Coase’s “Theory of the Firm” and transaction costs

In econ parlance, what it would do is reduce soft costs and transaction costs, allowing developers to move more quickly, fail faster, and reduce portfolio deadweight, enabling humans to focus almost exclusively on human work. The cost savings would materialize as lower development expenses and therefore higher project returns.

In simple terms: more MW and more $$$ per human = more capital and more projects.

Yet one thing we noticed in our research is how prominent transaction costs are in development finance, and it is interesting to consider how AI can help here as well. If a developer is seeking third-party financing for some expensive milestones, both sides have to put in work to come to terms, diligence the sites, and "speak the same language" with respect to risk. Large vertically integrated Independent Power Producers (IPPs) do not have this challenge because they work through established internal processes within their firm. But what happens if AI lowers the cost of diligencing sites and allows developers and financial partners to reduce transaction costs?

The economist Ronald Coase observed that firms emerge when the cost of organizing production through the price mechanism (discovering prices, negotiating contracts, etc.) exceeds the cost of organizing production within a firm. Essentially, firms allow for more efficient coordination by replacing market transactions with internal management.

It would stand to reason that if AI lowers the cost of "organizing production," which in this case means diligencing sites, verifying documents, etc., then we should see transaction costs decline, reducing the advantage of working within one financial entity. Ideally, this would enable developers and capital providers to sort through different flavors and risk profiles more quickly and nimbly, thereby becoming more efficient and further increasing project returns.

The tumultuous post-IRA world of partially developed projects will be ruled by the most creative developers and investors, and putting teams of AI agents at their disposal to pre-develop sites and standardize project risk assessment makes me optimistic we’ll be able to distinguish signal from noise faster and ultimately get tons of shit built.

The full whitepaper can be found here.

As an aside, Varietals of “Development Capital” by Segue Infrastructure is a wonderful and seminal overview of all types of development capital, from the simple to the complex, and had a huge influence on my thinking at the outset of this research.